AIBenchmarks: The "Consumer Reports" for AI That We Desperately Needed

Every week, a new AI model drops with a flashy chart claiming to "crush" GPT-4. AIBenchmarks is the free, no-nonsense hub that cuts through the marketing fluff to show you which tests actually matter.

Most developers are tired of cherry-picked stats. This tool collects and organizes the real "exams" (like SWE-bench for coding or Video-MME for vision) so you can see where these models actually stand. It's not an AI generator. It's the map for figuring out which AI vendors are lying to you.

🎨 What It Actually Does

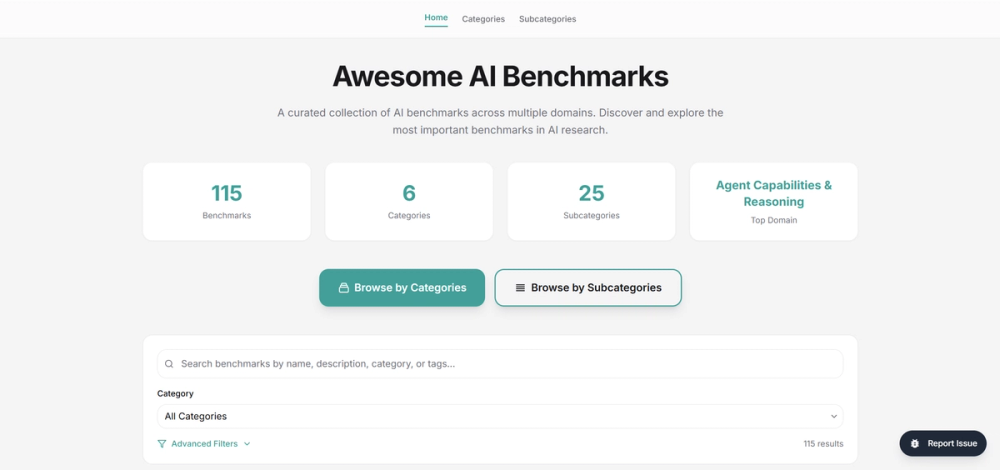

- Centralized Truth: Aggregates 115+ specific benchmarks (e.g., LiveCodeBench, Video-MME) into one searchable dashboard. – Stops you from digging through arXiv papers to find the "real" scores.

- Categorized "Exams": Filters tests by niche like "Programming," "Agent Capabilities," or "Multimodal." – Lets you find the specific test for your specific problem (e.g., "Can this AI actually write Kotlin?" vs. "Can it just chat?").

- Direct Access: Provides direct links to the official Paper, Code, and Live Leaderboard for each benchmark. – Saves you 20 tabs of Googling by giving you the source material instantly.

The Real Cost (Free vs. Paid)

This is the rare case where "Free" actually means free. There is no login, no credit system, and no "Pro" tier hiding the good data. The "cost" is simply your time to navigate to the external leaderboards it links to.

| Plan | Cost | Key Limits/Perks |
|---|---|---|
| Public | $0 | Unlimited access to the directory. No account needed. |
| Pro | N/A | Does not exist. The entire project is open access. |

How It Stacks Up (Competitor Analysis)

While AIBenchmarks is a directory, it competes with other hubs that track AI progress.

- Papers With Code: The academic heavyweight. It has way more data but is dense, ugly, and hard to navigate if you aren't a researcher. AIBenchmarks is cleaner and more practical for devs.

- Hugging Face Open LLM Leaderboard: The raw data hose. Great for seeing a single ranked list of models, but terrible for understanding what the tests actually measure. AIBenchmarks explains the tests themselves.

- LMSYS Chatbot Arena: The "vibe check." It relies on human voting, which is great for "feel" but bad for objective coding accuracy. AIBenchmarks focuses on the hard, technical metrics.

The Verdict

We have entered the "fog of war" phase of the AI boom, where every startup claims supremacy based on obscure metrics. AIBenchmarks is the flashlight. It doesn't tell you which model is "best" generally; it gives you the tools to decide which model is best for your specific task.

In a world drowning in hype, a quiet, well-organized library of facts is the most powerful tool you can have. Bookmark it before you fall for the next viral Twitter chart.